Voice Control in Smart Industry Using the ALOHA Tool Flow

Robot and Machinery Collaboration in an Industrial Environment

In the framework of the ALOHA H2020 project, Concept Reply built an embedded keyword spotting system based on deep learning techniques to control collaborative robots and machinery in an industrial environment. The voice control runs at the edge rather than in the cloud, which reduces concerns about response latency, data security, access control, user privacy and legal risk.

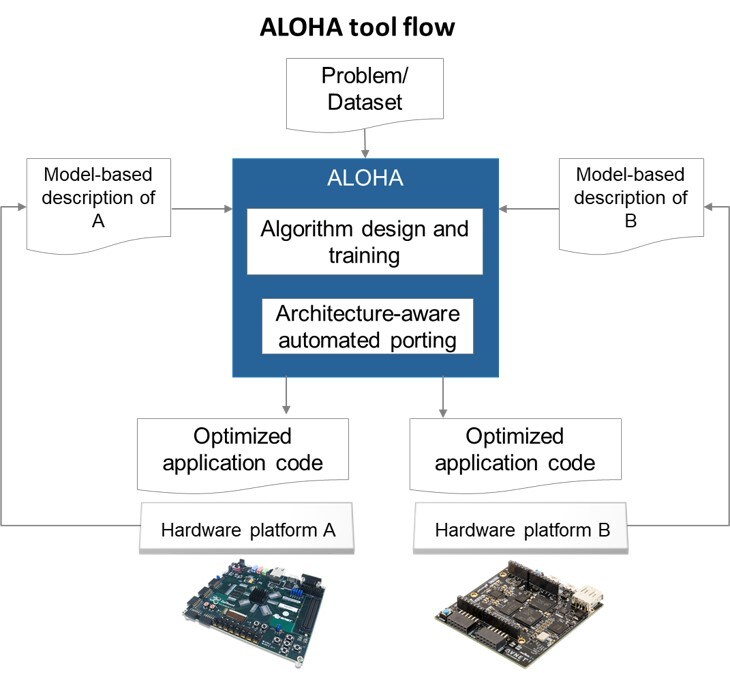

Learning to edge in a unique framework

Deep learning algorithms are very promising in the speech recognition domain, achieving high performance even in noisy environments such as industrial ones, where users demand high accuracy because they perform several tasks in parallel. However, applications in the smart industry domain require a shift to the edge paradigm to ensure real-time processing and data security, and programming for low-power architectures in turn requires advanced skills and significant effort.

ALOHA proposes an integrated tool flow that facilitates the design of deep learning applications and their porting to embedded heterogeneous architectures. The framework is architecture-aware: it considers the target hardware from the initial stages of development through to deployment, taking into account security, power efficiency and adaptivity requirements. Concept Reply used the ALOHA framework as a foundation to jumpstart development and create a benchmark for deep learning applied to voice interfaces in industrial environments.

Command Recognition to Control a Robotic Arm

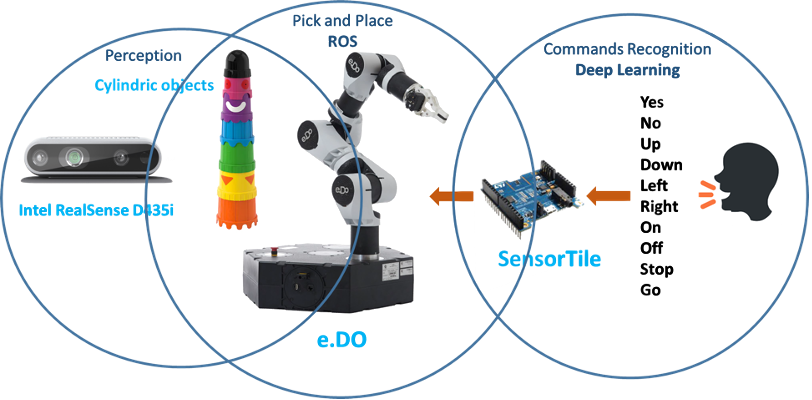

The solution proposed by Concept Reply integrates command recognition based on deep learning techniques with ROS logic and a perception module to control a robotic arm.

The target hardware for deploying the keyword spotting module is a tiny IoT module with limited memory and computing resources.

Concept Reply's solution is based on Convolutional Neural Network models and supports open datasets such as Google Speech Commands. The commands are one-second audio clips, each containing a single English word such as 'up', 'down', 'yes' or 'no', recorded by thousands of speakers. The models are therefore speaker independent and do not require further adaptation. Customized implementations are possible by selecting a different subset of the available keywords, which changes the number of classes. Thanks to dedicated classes for silence and unknown words, defined preprocessing pipelines and adversarial training techniques, the keyword spotting solution is also effective in real scenarios, where noise and other people talking are present, achieving state-of-the-art results.
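As a rough illustration of how a custom keyword subset changes the number of classes, the sketch below builds a label map that reserves one class for silence and one for unknown words. The names `SILENCE`, `UNKNOWN`, `build_label_map` and `label_for_clip` are hypothetical and chosen for this example only; the actual labelling scheme used in the project is not described here.

```python
from typing import Dict, List

# Hypothetical names for the two reserved classes (an assumption of this sketch).
SILENCE = "_silence_"
UNKNOWN = "_unknown_"


def build_label_map(keywords: List[str]) -> Dict[str, int]:
    """Assign class indices: the two reserved classes first, then the chosen keywords."""
    labels = [SILENCE, UNKNOWN] + list(keywords)
    return {label: index for index, label in enumerate(labels)}


def label_for_clip(word: str, label_map: Dict[str, int]) -> int:
    """Map the word spoken in a clip to a class index.

    An empty string stands for a clip with no speech; any word that is not
    one of the selected keywords falls into the unknown class.
    """
    if not word:
        return label_map[SILENCE]
    return label_map.get(word, label_map[UNKNOWN])


label_map = build_label_map(["up", "down", "yes", "no"])
num_classes = len(label_map)  # four keywords plus silence and unknown
```

Choosing four keywords therefore yields a six-class model, and any other word in the dataset is treated as a training example for the unknown class.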

The robot used is an anthropomorphic arm with spherical wrist, six axes and a gripper. We developed a ROS package in Python that handles the serial communication with the IoT board and controls the robot using ROS messages or ROS MoveIt. The package offers different modes to control the robot movement: 'poses', 'spatial' and 'joints'. In the 'poses' mode, the robot goes directly to predefined poses corresponding to the speech command received. In the 'spatial' mode, we can move the robot end effector in space using x, y, and z Cartesian coordinates. Finally, in the 'joints' mode, the robot rotates the identified joint by the specified angle. This lets us support several use cases involving precision movements in response to voice commands.
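The three control modes can be pictured as a small dispatcher that turns a recognized command into a motion target. The following is a minimal sketch in plain Python with no ROS dependency; `ArmState`, `NAMED_POSES`, `SPATIAL_STEPS` and `plan_target`, along with all pose values and step sizes, are hypothetical and not taken from the actual package.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ArmState:
    joints: List[float]                   # six joint angles, in radians
    position: Tuple[float, float, float]  # end-effector x, y, z, in metres


# Hypothetical lookup tables; the values are illustrative only.
NAMED_POSES: Dict[str, List[float]] = {
    "home": [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    "up": [0.0, -0.5, 0.5, 0.0, 0.0, 0.0],
}
SPATIAL_STEPS: Dict[str, Tuple[float, float, float]] = {
    "up": (0.0, 0.0, 0.05),
    "down": (0.0, 0.0, -0.05),
    "left": (0.0, 0.05, 0.0),
    "right": (0.0, -0.05, 0.0),
}


def plan_target(mode: str, command: str, state: ArmState,
                joint_index: int = 0, angle: float = 0.0) -> Dict[str, object]:
    """Translate a recognized command into a motion target for the given mode."""
    if mode == "poses":
        # Go directly to the predefined pose associated with the command.
        return {"kind": "joints", "values": list(NAMED_POSES[command])}
    if mode == "spatial":
        # Shift the end effector by a fixed Cartesian step.
        dx, dy, dz = SPATIAL_STEPS[command]
        x, y, z = state.position
        return {"kind": "position", "values": (x + dx, y + dy, z + dz)}
    if mode == "joints":
        # Rotate one joint by the requested angle, leaving the others unchanged.
        target = list(state.joints)
        target[joint_index] += angle
        return {"kind": "joints", "values": target}
    raise ValueError(f"unknown mode: {mode!r}")
```

In a real package the returned target would be handed to the motion planner or published as a ROS message; here it is a plain dictionary so the mode logic can be read and tested in isolation.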

We introduced a vision system based on a stereo camera to account for dynamic changes in the robot's environment. The perception pipeline recognizes 3D objects from the raw image using the Point Cloud Library and adds them to the scene as collision objects. This enables scenarios such as avoiding obstacles while executing the planned path and performing pick-and-place tasks.
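To make the last step of such a pipeline concrete, the sketch below reduces an already segmented cluster of 3D points to an axis-aligned box, the kind of simple shape a planner can treat as a collision object. It is plain Python rather than the Point Cloud Library, it assumes segmentation has happened upstream, and `bounding_box` is a hypothetical helper, not part of the project's code.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


def bounding_box(points: Iterable[Point]) -> Tuple[Point, Point]:
    """Return (center, size) of the axis-aligned box enclosing a point cluster."""
    pts = list(points)
    if not pts:
        raise ValueError("cannot compute a bounding box for an empty cluster")
    # Per-axis extremes of the cluster.
    mins = tuple(min(p[axis] for p in pts) for axis in range(3))
    maxs = tuple(max(p[axis] for p in pts) for axis in range(3))
    center = tuple((lo + hi) / 2.0 for lo, hi in zip(mins, maxs))
    size = tuple(hi - lo for lo, hi in zip(mins, maxs))
    return center, size
```

An axis-aligned box is a deliberate simplification: it overestimates the volume of tilted or irregular objects, which is conservative for obstacle avoidance but may be too coarse for grasp planning.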

REPLY’S VALUE

The solution developed by Concept Reply makes designers' lives easier, allowing them to characterize and deploy an embedded voice recognition system capable of keyword spotting in critical scenarios, such as an industrial application where real-time processing is mandatory to enable fast and safe human-machinery interaction. The deep learning models can be customized to recognize the keywords required by different applications, do not require further training by the end user, and are comparable with the state of the art in terms of accuracy. The ROS package and perception pipeline enable a wide range of collaborative robot and automation scenarios involving precision movements and object pick-and-place triggered by voice commands.